Singularity is the notion or hypothesis that artificial intelligence will trigger runaway technological advancements and unbridled changes to humanity — many positive but many dangerous.

In other words, machines will overtake humanity and artificial intelligence will begin to write its own computer code without human help. Each new generation of AI will be much smarter than the previous one, until this intelligence explosion far surpasses all human intelligence.

Sci-fi writers and filmmakers have been entertaining us with these notions for at least a century. But now they appear to be on the horizon. For example, the average IQ in North America is about 100, and Albert Einstein’s IQ was reportedly in the 170-200 range. But some claim that within 8-10 years, enhanced computing power and AI will be producing machines with IQs around 10,000. Who knows if it will happen?

But we’re already living with many early AI applications. Netflix accurately predicts which films we will like based on our previous reactions and choices of movies. Apple’s Siri, the friendly voice-activated assistant, gives us directions, adds events to our calendars and provides recipe suggestions.

These pseudo-AI applications are so rudimentary compared to what’s coming that they might as well be Neanderthals to today’s human race.

The AI optimists point to all the wonderful things in store for us, such as solving life’s most complex problems surrounding the environment, aging, disease, poverty and famine. These super-human machines could even push us toward deep-space exploration of new worlds and galaxies light years away.

Ultimately, according to AI proponents, what once took years and years of human trial and error will happen in seconds, and the machine-god will emerge.

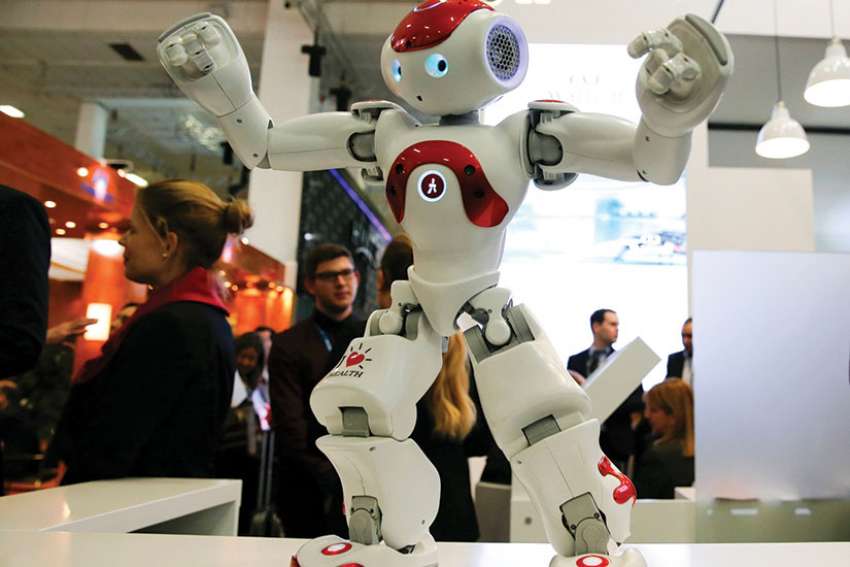

But at what cost? The immediate cost will be job losses and human displacement in the workforce by more efficient robots. The longer-term costs could be even greater, such as human subordination to far superior intellects.

In July, Tesla and SpaceX CEO Elon Musk — a man who knows a thing or two about AI — took heat from some technology luminaries for publicly musing about the dangers. “AI is a fundamental existential risk for human civilization,” he said.

Musk’s concerns are valid and well worth talking about before it’s too late. They also got me thinking about faith and God. If this superintelligence can somehow solve the most complex problems, could it ultimately unravel the greatest mysteries, such as the things we hold dear in the Apostles’ Creed and Nicene Creed?

Looking for more information, I naturally employed some artificial intelligence and spoke into my phone with this command: “OK, Google. Singularity and faith.” Lo and behold, a vast array of material popped up from scholars, religious figures and deep-thinking geeks. Not only that, but I read about a quasi-religion in California’s Silicon Valley called “Singularity” that predicts 2045 to be the year when humans will build the last machine and, effectively, hand over everything to AI.

“The Abraham — or perhaps John the Baptist — of this faith is Ray Kurzweil,” writes Bryan Appleyard in Britain’s The Spectator. “Kurzweil has long been the hot gospeller of the future. As with all futurologists, his forecasts have proved more often wrong than right. Yet he is a marketing genius and that has led to him being lauded by presidents and employed by Google to work on artificial intelligence. … It is Kurzweil who chose the date of 2045 for the advent of the Singularity and who has been the final machine’s most effective disciple.”

Kurzweil may get things wrong more often than right, but it certainly feels like we’re moving into a world envisioned by writers like Aldous Huxley, H.G. Wells and George Orwell, and by the creators of Star Wars, Star Trek and a host of others.

But AI is uncharted waters. There is no history to compare it with. We don’t know what will spring from it. The adage “standing on the shoulders of giants,” or discovering truth by building on the discoveries of the great men and women before us, cannot apply because human experience will be removed from AI’s process and development.

The only model for such exponential acceleration is the growth in power of computer chips described by Moore’s Law, popularly stated as computing power doubling every 18 months. Moore’s Law may or may not continue, but even if it does, let’s hope and pray machine intelligence will be harnessed by humans, not the other way around.
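For readers who want a feel for what that kind of doubling implies, here is a minimal Python sketch of the arithmetic. It assumes the 18-month doubling figure quoted above holds steadily, which is a simplification, and the function name `growth_factor` is made up for illustration.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Return how many times larger capacity becomes after `years`,
    assuming (hypothetically) it doubles every `doubling_months` months."""
    doublings = years * 12.0 / doubling_months  # number of doubling periods elapsed
    return 2.0 ** doublings


if __name__ == "__main__":
    # 3 years = 2 doublings, 15 years = 10 doublings, 30 years = 20 doublings
    for years in (3, 15, 30):
        print(f"{years:>2} years -> about {growth_factor(years):,.0f}x")
```

Under that assumption, three years yields a fourfold increase, 15 years roughly a thousandfold, and 30 years roughly a millionfold, which is why a steady doubling so quickly outruns everyday intuition.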